How To Read An HDFS File In PySpark

You can access HDFS files from PySpark via their full path if no configuration is provided, and reading is just as easy as writing with `sparkSession.read…`. To check which files exist, select the "Files View" in the Ambari console (matrix icon at the top right) and navigate to /user/hdfs. A common question at that point is how to read a Sqoop output file such as part_m_0000.

For file-system operations outside Spark, such as deleting a path, you can use the hdfs3 library:

```python
from hdfs3 import HDFileSystem

# host and port identify your NameNode; some_path is the HDFS path to remove
hdfs = HDFileSystem(host=host, port=port)
hdfs.rm(some_path)
```

The Apache Arrow Python bindings are the latest option (and they are often already available on a Spark cluster, as they are required for `pandas_udf`).

Spark provides several ways to read .txt files: the `sparkContext.textFile()` and `sparkContext.wholeTextFiles()` methods read into an RDD, while `spark.read.text()` reads into a DataFrame (`spark.read.textFile()`, which returns a `Dataset[String]`, exists only in the Scala and Java APIs).
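The three PySpark text-reading entry points named above can be sketched side by side. This is a minimal sketch, assuming you already have a `SparkSession` called `spark` and an HDFS path to read; the helper name `read_text_three_ways` is hypothetical.

```python
from typing import TYPE_CHECKING, Tuple

if TYPE_CHECKING:  # imports used only for type hints, so the module loads without pyspark
    from pyspark import RDD
    from pyspark.sql import DataFrame, SparkSession


def read_text_three_ways(spark: "SparkSession", path: str) -> Tuple["RDD", "RDD", "DataFrame"]:
    """Read the same HDFS text path with the RDD and DataFrame APIs (hypothetical helper)."""
    sc = spark.sparkContext
    # RDD of strings: one element per line across all matched files
    lines_rdd = sc.textFile(path)
    # RDD of (file_path, whole_file_content) pairs: one element per file
    files_rdd = sc.wholeTextFiles(path)
    # DataFrame with a single string column named "value", one row per line
    lines_df = spark.read.text(path)
    return lines_rdd, files_rdd, lines_df
```

For example, `lines_rdd, files_rdd, df = read_text_three_ways(spark, "hdfs://namenodehost/user/hdfs/test/")` (the path is a placeholder). The file contents are not read until an action such as `count()` or `show()` runs.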

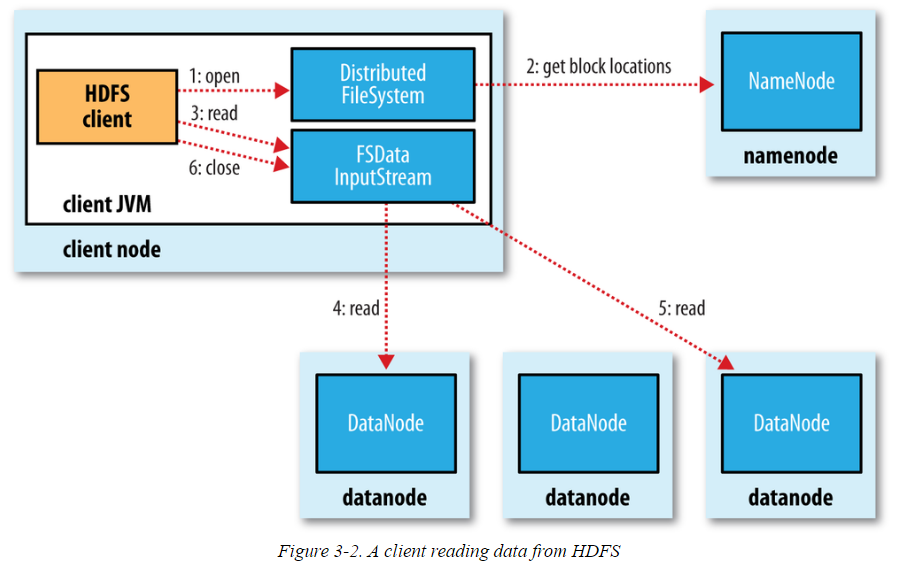

Under the hood, the HDFS input stream accesses DataNode 1 to read the relevant information from the block located there. Similarly, it also accesses DataNode 3 to read the relevant data present in that node.

Code example (read a CSV file from HDFS into a DataFrame):

```python
# Read from HDFS; sparkSession is an existing SparkSession
df_load = sparkSession.read.csv('hdfs://cluster/user/hdfs/test/example.csv')
df_load.show()
```

This code only shows the first 20 records of the file, because `show()` prints 20 rows by default.
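To print a different number of rows than the default 20, pass a count to `show()`. A minimal sketch building on the CSV example, assuming the file has a header row (the original example does not say whether it does); `preview_hdfs_csv` is a hypothetical helper name.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:
    from pyspark.sql import DataFrame, SparkSession


def preview_hdfs_csv(spark: "SparkSession", path: str, n: int = 20) -> "DataFrame":
    """Load a CSV file from HDFS and print its first n rows (hypothetical helper)."""
    df_load = (
        spark.read
        .option("header", True)       # assumption: the first line holds column names
        .option("inferSchema", True)  # extra pass over the data to guess column types
        .csv(path)
    )
    # truncate=False prints full cell values instead of cutting them at 20 characters
    df_load.show(n, truncate=False)
    return df_load
```

Calling `preview_hdfs_csv(spark, "hdfs://cluster/user/hdfs/test/example.csv", n=5)` would print five rows and return the DataFrame for further use.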

How can I find the path of a file in HDFS, and how do I read a file from HDFS? To make the read work from a Jupyter notebook app in Saagie, add the following code snippet:

```python
import os

# With simple (non-Kerberos) authentication, Hadoop clients act as this user
os.environ["HADOOP_USER_NAME"] = "hdfs"
# Value taken as-is from the Saagie example
os.environ["PYTHON_VERSION"] = "3.5.2"
```

In Java, the Hadoop `FileSystem` API is the entry point (`FileSystem fs = FileSystem.`…).

How to use it on Data Fabric? In order to run any PySpark job on Data Fabric, you must package your Python source file into a zip file.
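For the Data Fabric requirement, Python's standard `zipfile` module can build the archive. This is a minimal sketch that only creates the zip; how you then submit it to Data Fabric is not covered here, and `package_job` and the file names are hypothetical.

```python
import zipfile
from pathlib import Path


def package_job(source_file: str, zip_path: str) -> str:
    """Zip a single PySpark source file (hypothetical helper); returns the zip path."""
    src = Path(source_file)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # Store the file at the archive root under its own name, without parent directories
        zf.write(src, arcname=src.name)
    return zip_path
```

For example, `package_job("read_hdfs.py", "read_hdfs.zip")` produces an archive whose only entry is `read_hdfs.py`.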

How to read CSV files using PySpark » Programming Funda

Set up the environment variables for PySpark… The Parquet file destination is a local folder.
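Environment variables can also be set from Python itself, for example in a notebook, as long as this happens before the first `SparkSession` is created, because PySpark reads them when it launches the JVM and the SparkContext. A minimal sketch: `HADOOP_CONF_DIR` and `PYSPARK_PYTHON` are standard Spark/Hadoop variables, while the helper name and the example paths are hypothetical.

```python
import os
from typing import Dict, Optional


def set_pyspark_env(hadoop_conf_dir: str, python_exec: Optional[str] = None) -> Dict[str, str]:
    """Set environment variables PySpark reads at startup (hypothetical helper).

    Must run before the first SparkSession/SparkContext is created.
    """
    # Directory holding core-site.xml and hdfs-site.xml. If core-site.xml sets
    # fs.defaultFS to an hdfs:// URI, paths like /user/hdfs/... resolve against it.
    os.environ["HADOOP_CONF_DIR"] = hadoop_conf_dir
    if python_exec is not None:
        # Interpreter PySpark uses on the workers (and on the driver unless
        # PYSPARK_DRIVER_PYTHON is set)
        os.environ["PYSPARK_PYTHON"] = python_exec
    return {k: os.environ[k] for k in ("HADOOP_CONF_DIR", "PYSPARK_PYTHON") if k in os.environ}
```

A typical call would be `set_pyspark_env("/etc/hadoop/conf", "/usr/bin/python3")` followed by building the session.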

Hadoop Distributed File System: Apache Hadoop HDFS Architecture » Edureka

Anatomy of File Read and Write in HDFS

Before reading the HDFS data, the Hive metastore server has to be started as shown in…
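Once the metastore is running, a Spark session with Hive support can be pointed at it so that Hive tables backed by HDFS become queryable. A minimal sketch, assuming the metastore listens on its usual Thrift port 9083 and using the commonly seen `hive.metastore.uris` setting; the helper names and host are hypothetical, and the `pyspark` import is deferred so the module loads without Spark installed.

```python
def metastore_uri(host: str, port: int = 9083) -> str:
    """Build the Thrift URI of a Hive metastore (9083 is the usual default port)."""
    return f"thrift://{host}:{port}"


def hive_session(app_name: str, metastore_host: str):
    """Create a SparkSession that talks to an already-running Hive metastore (hypothetical helper)."""
    from pyspark.sql import SparkSession  # deferred: only needed when a session is built

    return (
        SparkSession.builder
        .appName(app_name)
        .config("hive.metastore.uris", metastore_uri(metastore_host))
        .enableHiveSupport()  # lets spark.sql() and spark.table() see Hive tables
        .getOrCreate()
    )
```

With a session from `hive_session("read-hdfs", "metastore-host")`, a query such as `spark.sql("SELECT * FROM some_db.some_table").show()` would read the table's HDFS files (the database and table names are placeholders).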

How to read an ORC file using PySpark

Good news: the example.csv file is present. A related Spark tutorial shows how to read a text file from local storage and Hadoop HDFS into an RDD and a DataFrame using Scala examples.

Using FileSystem API to read and write data to HDFS

In my previous post, I demonstrated how to write and read Parquet files in Spark/Scala.
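A PySpark counterpart of that Parquet round trip can be sketched as follows. It is an illustration, not the code from that post; it assumes an existing `SparkSession` and a writable HDFS directory, and `write_then_read_parquet` is a hypothetical name.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:
    from pyspark.sql import DataFrame, SparkSession


def write_then_read_parquet(spark: "SparkSession", df: "DataFrame", path: str) -> "DataFrame":
    """Write df to path as Parquet, then load it back (hypothetical helper)."""
    # mode("overwrite") replaces existing output; the default mode raises an error instead
    df.write.mode("overwrite").parquet(path)
    # Parquet stores the schema, so no header or inferSchema options are needed on read
    return spark.read.parquet(path)
```

Passing an `hdfs://...` path writes into HDFS; when Spark runs on a single machine, a `file:///...` path writes to a local folder instead.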

Reading HDFS files from a Java program

Reading CSV files using PySpark: Spark can (and should) read whole directories, if possible.
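Pointing the reader at a directory, or at a list of directories, loads every matching file in one job. A minimal sketch, assuming CSV files with a header row; the helper name and paths are hypothetical, and the `pathGlobFilter` and `recursiveFileLookup` options require Spark 3.0 or later.

```python
from typing import TYPE_CHECKING, List, Union

if TYPE_CHECKING:
    from pyspark.sql import DataFrame, SparkSession


def read_csv_dir(spark: "SparkSession", paths: Union[str, List[str]], recursive: bool = False) -> "DataFrame":
    """Read all *.csv files under one or more HDFS directories (hypothetical helper)."""
    reader = (
        spark.read
        .option("header", True)
        .option("pathGlobFilter", "*.csv")  # only pick up files whose names match
    )
    if recursive:
        # Also descend into subdirectories (this disables partition discovery)
        reader = reader.option("recursiveFileLookup", True)
    # csv() accepts a single path string or a list of paths
    return reader.csv(paths)
```

For example, `read_csv_dir(spark, ["hdfs://cluster/data/2023", "hdfs://cluster/data/2024"])` returns one DataFrame covering both directories.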

How to read a JSON file in PySpark? » ProjectPro

With the Apache Arrow bindings, the same delete looks like this (host, port and some_path are placeholders):

```python
from pyarrow import hdfs

# Legacy pyarrow HDFS API: connect to the NameNode, then delete recursively
fs = hdfs.connect(host, port)
fs.delete(some_path, recursive=True)
```

Note that `pyarrow.hdfs` is deprecated in recent pyarrow releases in favor of `pyarrow.fs.HadoopFileSystem`.
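With the non-deprecated `pyarrow.fs` API, the same recursive delete can be sketched as below. This assumes pyarrow can find libhdfs and a Hadoop client on the machine (typically `JAVA_HOME`, `HADOOP_HOME` and a Hadoop `CLASSPATH`); the helper names are hypothetical, and the pyarrow import is deferred so the module loads without it.

```python
def connect_hdfs(host: str, port: int = 8020):
    """Open an HDFS connection with pyarrow.fs (hypothetical helper)."""
    from pyarrow import fs  # deferred so this module imports without pyarrow

    return fs.HadoopFileSystem(host, port)


def delete_path(hdfs, path: str) -> None:
    """Delete a file, or a directory with everything under it, mirroring the
    legacy fs.delete(path, recursive=True) call."""
    info = hdfs.get_file_info(path)
    if info.is_file:
        hdfs.delete_file(path)
    else:
        # delete_dir removes the directory together with its contents
        hdfs.delete_dir(path)
```

Usage would look like `delete_path(connect_hdfs("namenodehost"), "/user/hdfs/test/old_output")`, with both the host and the path as placeholders.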

Anatomy of File Read in HDFS » DBA2BigData

How to read and write files from HDFS with PySpark.

Recipe objective: write and read a JSON file from HDFS.

As for reading part_m_0000: the path is /user/root/etl_project, as you've shown, and I'm sure it is also in your Sqoop command. Spark can (and should) read whole directories, if possible, so you can point the reader at that directory rather than at the single part file.
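Reading the Sqoop import directory as a whole can be sketched like this. It assumes Sqoop's default text output (comma-separated fields, no header line) and a NameNode reachable as `namenodehost`; the column names and the helper name are hypothetical.

```python
from typing import TYPE_CHECKING, Sequence

if TYPE_CHECKING:
    from pyspark.sql import DataFrame, SparkSession


def read_sqoop_output(spark: "SparkSession", directory: str, columns: Sequence[str]) -> "DataFrame":
    """Load every part-m-* file Sqoop wrote into one DataFrame (hypothetical helper)."""
    # Reading the directory picks up all part files; marker files such as _SUCCESS
    # are skipped because Spark ignores names starting with "_" or "."
    df = spark.read.option("header", False).csv(directory)
    # Without a header Spark names the columns _c0, _c1, ...; rename them by position
    return df.toDF(*columns)
```

For example, `read_sqoop_output(spark, "hdfs://namenodehost/user/root/etl_project", ["id", "name"])`, where the column list must match the number of fields Sqoop exported.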

What is HDFS » 立地货

The Path Is /user/root/etl_project, As You've Shown, And I'm Sure It Is Also In Your Sqoop Command.

Read From HDFS With sparkSession.read.csv()

Using `spark.read.json(path)` or `spark.read.format("json").load(path)`, you can read a JSON file into a Spark DataFrame; both methods take an HDFS path as an argument.
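Both read forms, plus a write back to HDFS, can be sketched as below. It assumes an existing `SparkSession`; the helper name is hypothetical. By default Spark expects JSON Lines (one object per line), and the `multiLine` option handles a file that holds a single JSON document or array.

```python
from typing import TYPE_CHECKING, Tuple

if TYPE_CHECKING:
    from pyspark.sql import DataFrame, SparkSession


def json_round_trip(
    spark: "SparkSession", src: str, dest: str, multiline: bool = False
) -> Tuple["DataFrame", "DataFrame"]:
    """Read JSON from an HDFS path two equivalent ways and write one copy back (hypothetical helper)."""
    # Shorthand reader method
    df_a = spark.read.option("multiLine", multiline).json(src)
    # Generic form: format name plus load(); yields the same DataFrame
    df_b = spark.read.format("json").option("multiLine", multiline).load(src)
    # Write as JSON Lines into the dest directory (one part file per partition)
    df_a.write.mode("overwrite").json(dest)
    return df_a, df_b
```

Call it as, for example, `json_round_trip(spark, "hdfs://cluster/user/hdfs/test/input.json", "hdfs://cluster/user/hdfs/test/json_out")`, with both paths as placeholders.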

Reading Is Just As Easy As Writing With SparkSession.read…

In this page, I am going to demonstrate how to write and read Parquet files in HDFS… You could access HDFS files via their full path, hdfs://namenodehost/…, if no configuration is provided (namenodehost is your localhost if HDFS is located in a local environment).
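Building that full path by hand is easy to get wrong, so a tiny helper can assemble it. A minimal sketch: `hdfs_uri` is hypothetical, and the port is left optional because it depends on your cluster (8020 is a common NameNode RPC port, while many single-node setups use 9000; `fs.defaultFS` in core-site.xml shows the real value).

```python
from typing import Optional


def hdfs_uri(path: str, namenode_host: str = "localhost", port: Optional[int] = None) -> str:
    """Turn an absolute HDFS path into a fully qualified hdfs:// URI (hypothetical helper).

    namenode_host defaults to localhost, matching an HDFS that runs in a local environment.
    """
    if not path.startswith("/"):
        raise ValueError(f"expected an absolute HDFS path, got {path!r}")
    authority = namenode_host if port is None else f"{namenode_host}:{port}"
    return f"hdfs://{authority}{path}"
```

The result can be passed straight to a reader, for example `spark.read.csv(hdfs_uri("/user/hdfs/test/example.csv", "namenodehost", 8020))`.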