Linear Regression Closed Form Solution

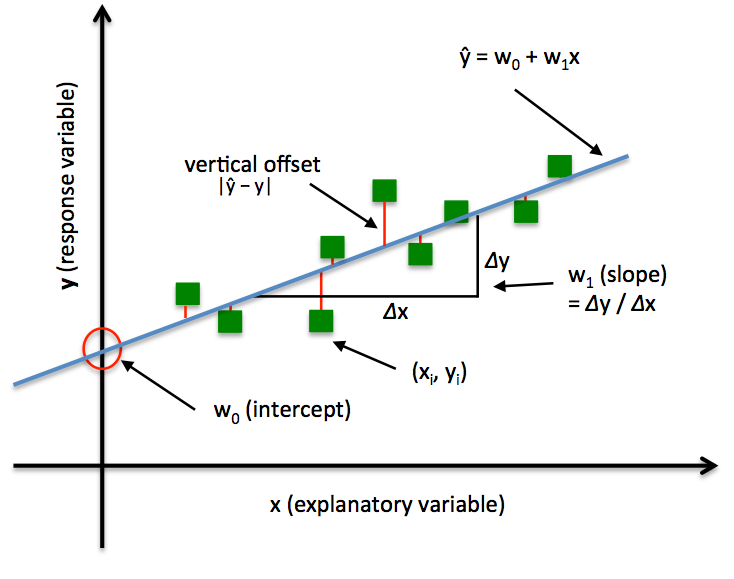

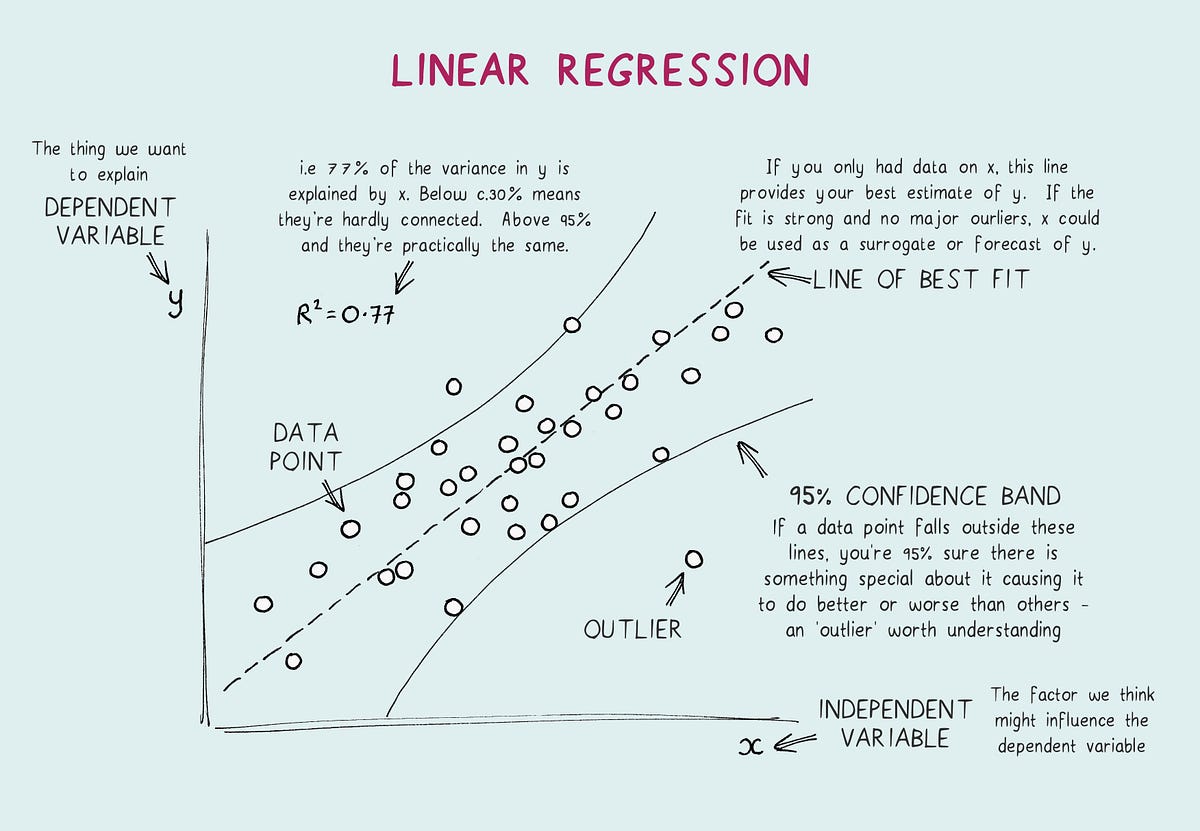

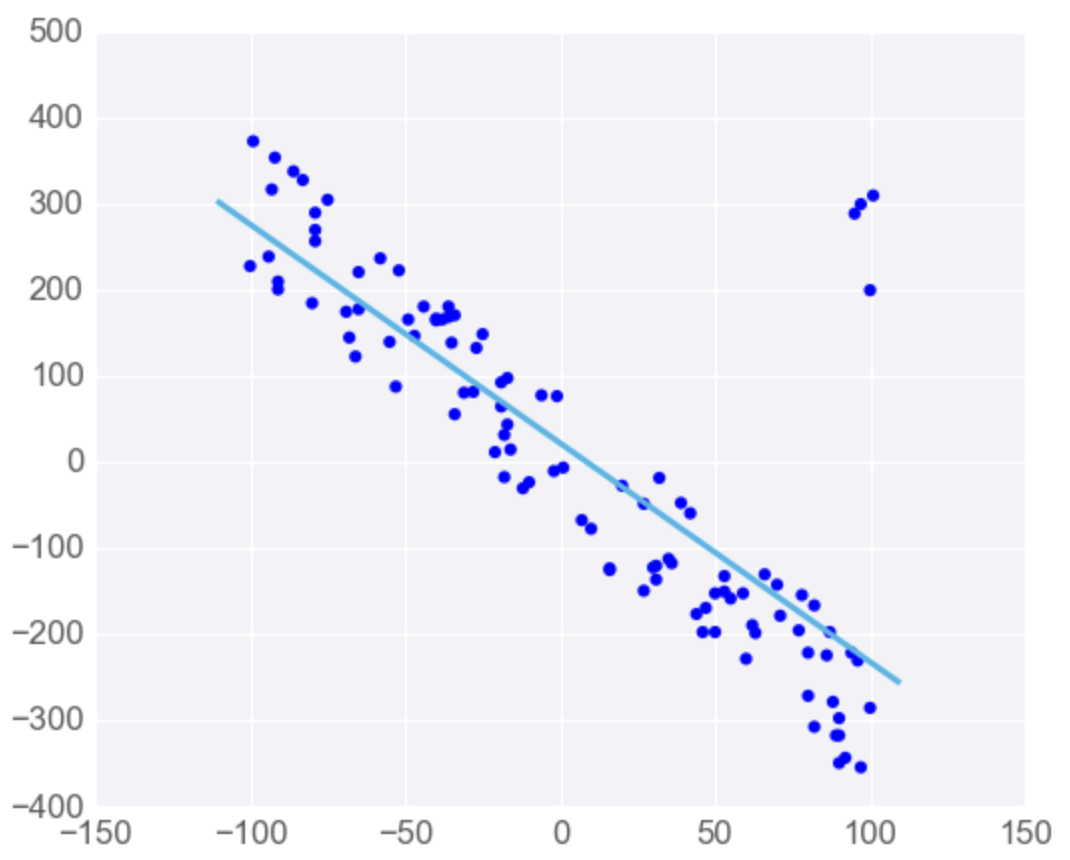

Linear regression has an exact, closed-form solution, which makes it a useful starting point for understanding many other statistical learning methods. With a single input, the linear function (linear regression model) is defined as $h(x) = b_0 + b_1 x$. With several inputs, the samples are stacked into a design matrix $X$ (one row per sample, with a column of ones for the intercept) and the targets into a vector $y$, and the coefficients $\beta$ are chosen to minimize the squared error $(y - X\beta)^\top (y - X\beta)$. Assuming $X$ has full column rank (which may not be true!), the minimizer is

$$\hat{\beta} = (X^\top X)^{-1} X^\top y \qquad (4)$$

This is the maximum-likelihood estimate (MLE) for $\beta$ when the noise is assumed to be independent and Gaussian with constant variance.
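
The step from the squared error to the closed-form estimate takes one gradient computation. The derivation below is the standard one; the noise model $y = X\beta + \varepsilon$ with $\varepsilon \sim \mathcal{N}(0, \sigma^2 I)$ in the last line is an assumption added here to show where the MLE interpretation comes from.

$$
\begin{aligned}
J(\beta) &= (y - X\beta)^\top (y - X\beta) = y^\top y - 2\beta^\top X^\top y + \beta^\top X^\top X \beta \\
\nabla_\beta J(\beta) &= -2X^\top y + 2X^\top X \beta = 0
\;\Longrightarrow\; X^\top X \hat{\beta} = X^\top y
\;\Longrightarrow\; \hat{\beta} = (X^\top X)^{-1} X^\top y \\
\log p(y \mid X, \beta) &= -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\, J(\beta)
\end{aligned}
$$

Since the log-likelihood is a constant minus $J(\beta)/(2\sigma^2)$, maximizing it over $\beta$ is the same as minimizing $J(\beta)$. The Hessian $2X^\top X$ is positive semidefinite, so the stationary point is a minimizer, and it is unique exactly when $X^\top X$ is invertible, i.e. when $X$ has full column rank.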

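A minimal pure-Python sketch of the closed-form estimate follows. Rather than computing the inverse explicitly, it solves $(X^\top X)\beta = X^\top y$ by Gaussian elimination, which gives the same result when $X$ has full column rank. It uses plain lists (no NumPy) so it runs without dependencies; the function names and the singularity tolerance are illustrative assumptions, not taken from any library.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def solve_linear_system(a: Matrix, b: Vector, tol: float = 1e-12) -> Vector:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented copy [a | b], so the caller's lists are not modified.
    m = [list(map(float, row)) + [float(b_i)] for row, b_i in zip(a, b)]
    for col in range(n):
        # Use the row with the largest remaining entry as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            raise ValueError("singular system: X may not have full column rank")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def normal_equation(X: Matrix, y: Vector) -> Vector:
    """Least-squares coefficients from (X^T X) beta = X^T y."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * y_k for row, y_k in zip(X, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Each row is [1, x1, x2]; the leading 1 multiplies the intercept.
X = [[1, 0, 1], [1, 1, 0], [1, 2, 1], [1, 3, 2], [1, 4, 0]]
y = [-2, 3, 2, 1, 9]  # generated exactly from y = 1 + 2*x1 - 3*x2
print([round(b, 6) for b in normal_equation(X, y)])  # [1.0, 2.0, -3.0]
```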
In practice the full-column-rank assumption can fail. One asker applying linear regression in Python to a dataset of 9 samples with around 50 features is in exactly this situation: with more features than samples, $X$ has rank at most 9, so the roughly $50 \times 50$ matrix $X^\top X$ is singular and has no inverse. Another knows that the way to do this is through the normal equation using matrix algebra, but has never seen a nice closed form for each individual $\hat{\beta}_i$. (Nonlinear least-squares problems generally have no closed form at all; the nonlinear problem is usually solved by iterative refinement.)

Consider the penalized linear regression problem:

$$\min_{\beta}\; (y - X\beta)^\top (y - X\beta) + \lambda \sqrt{\sum_i \beta_i^2}$$

Without the square root, this problem is ridge regression, which has a closed-form solution. Write both solutions in terms of matrix and vector operations.
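
For the version without the square root (ridge regression), setting the gradient of $(y - X\beta)^\top (y - X\beta) + \lambda \sum_i \beta_i^2$ to zero gives $(X^\top X + \lambda I)\beta = X^\top y$. For $\lambda > 0$ this matrix is positive definite, so the system has a unique solution even when $X$ lacks full column rank, as in the 9-sample, 50-feature case. A minimal sketch follows; the names are hypothetical, it penalizes every coefficient (practical implementations usually leave the intercept unpenalized), and it does not handle the square-root version.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def gaussian_solve(a: Matrix, b: Vector, tol: float = 1e-12) -> Vector:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(b_i)] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ridge_closed_form(X: Matrix, y: Vector, lam: float) -> Vector:
    """Minimize (y - X b)^T (y - X b) + lam * sum(b_i^2) via (X^T X + lam I) b = X^T y."""
    if lam < 0:
        raise ValueError("lam must be non-negative")
    p = len(X[0])
    # X^T X with lam added on the diagonal. This sketch penalizes every
    # coefficient, including an intercept column if X has one.
    a = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    b = [sum(row[i] * y_k for row, y_k in zip(X, y)) for i in range(p)]
    return gaussian_solve(a, b)


# One sample, three features: more features than samples, so X^T X is singular.
X_wide = [[1.0, 2.0, 3.0]]
y_wide = [1.0]
print(ridge_closed_form(X_wide, y_wide, lam=1.0))  # close to [1/15, 2/15, 3/15]
```

With `lam=0.0` the same wide example raises `ValueError`, because the unpenalized normal equation is singular there.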

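On the question of a closed form for each $\hat{\beta}_i$: for the single-input model $h(x) = b_0 + b_1 x$, the 2 × 2 normal equations can be solved by hand, giving the familiar per-coefficient formulas $b_1 = \sum_k (x_k - \bar{x})(y_k - \bar{y}) / \sum_k (x_k - \bar{x})^2$ and $b_0 = \bar{y} - b_1 \bar{x}$. The sketch below (function name hypothetical) computes exactly these. With several correlated inputs the coefficients are coupled through $X^\top X$, which is why the matrix form is normally used instead.

```python
from typing import Sequence, Tuple


def simple_linear_regression(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Closed-form least-squares (b0, b1) for h(x) = b0 + b1 * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # b1 = sum((x - x_bar)(y - y_bar)) / sum((x - x_bar)^2)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal, so the slope is undefined")
    b1 = sxy / sxx
    # The fitted line always passes through (x_bar, y_bar).
    b0 = y_bar - b1 * x_bar
    return b0, b1


b0, b1 = simple_linear_regression([1, 2, 3], [1, 2, 2])
print(b0, b1)  # roughly 0.667 and 0.5
```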
The closed-form solution is straightforward to implement, but it is worth trying different methodologies for linear regression and comparing the results. A common question is whether the backend of sklearn's `LinearRegression` uses something different to compute the coefficients. In one comparison, plotting the coefficients `β` alongside the closed-form and LSMR solutions (`using Plots; scatter(β); scatter!(closed_form_solution); scatter!(lsmr_solution)`) shows that they are actually pretty close. Iteration also shows up at a lower level: even a square root or an inverse can be computed with Newton's method.
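
A minimal sketch of those two Newton iterations, with hypothetical function names. For $\sqrt{a}$ the update is $x \leftarrow (x + a/x)/2$; for $1/a$ it is $x \leftarrow x(2 - ax)$, which uses only multiplication and subtraction and converges when the starting guess satisfies $0 < a x_0 < 2$. Both start from a power of two obtained with `math.frexp`, so the first guess is already within a factor of two of the answer; the tolerance and iteration cap are illustrative choices.

```python
import math


def newton_sqrt(a: float, tol: float = 1e-15, max_iter: int = 100) -> float:
    """Square root of a >= 0 via Newton's method on f(x) = x^2 - a."""
    if a < 0:
        raise ValueError("square root of a negative number")
    if a == 0:
        return 0.0
    _, e = math.frexp(a)  # a = m * 2**e with 0.5 <= m < 1
    x = math.ldexp(1.0, (e + 1) // 2)  # 2**ceil(e/2), within a factor of 2 of sqrt(a)
    for _ in range(max_iter):
        nxt = 0.5 * (x + a / x)
        if abs(nxt - x) <= tol * nxt:
            return nxt
        x = nxt
    return x


def newton_reciprocal(a: float, tol: float = 1e-15, max_iter: int = 100) -> float:
    """1/a via Newton's method on f(x) = 1/x - a, using no division."""
    if a == 0:
        raise ZeroDivisionError("reciprocal of zero")
    if a < 0:
        return -newton_reciprocal(-a, tol, max_iter)
    _, e = math.frexp(a)
    x = math.ldexp(1.0, -e)  # 2**-e, so a * x lies in [0.5, 1)
    for _ in range(max_iter):
        nxt = x * (2.0 - a * x)
        if abs(nxt - x) <= tol * nxt:
            return nxt
        x = nxt
    return x


print(newton_sqrt(2.0), newton_reciprocal(3.0))
```

Both iterations converge quadratically: the number of correct digits roughly doubles at each step once the guess is close.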

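Library solvers generally avoid forming $(X^\top X)^{-1}$, because forming $X^\top X$ squares the condition number of the problem. To our understanding, sklearn's `LinearRegression` delegates dense inputs to SciPy's least-squares routine (`scipy.linalg.lstsq`) rather than inverting $X^\top X$. As one illustration of a different methodology (not the algorithm any particular library uses, and not LSMR, which is iterative), the sketch below solves least squares through a QR factorization $X = QR$ computed with modified Gram-Schmidt, then solves the triangular system $R\beta = Q^\top y$. Names and the rank tolerance are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def qr_least_squares(X: Matrix, y: Vector, tol: float = 1e-12) -> Vector:
    """Least squares via X = QR (modified Gram-Schmidt), then R beta = Q^T y."""
    n, p = len(X), len(X[0])
    # q[j] starts as column j of X and is orthonormalized in place.
    q = [[float(X[i][j]) for i in range(n)] for j in range(p)]
    r = [[0.0] * p for _ in range(p)]
    for j in range(p):
        for k in range(j):
            # Remove the component along the already-orthonormal column q[k].
            r[k][j] = dot(q[k], q[j])
            q[j] = [a - r[k][j] * b for a, b in zip(q[j], q[k])]
        norm = math.sqrt(dot(q[j], q[j]))
        if norm < tol:
            raise ValueError("X does not have full column rank")
        r[j][j] = norm
        q[j] = [a / norm for a in q[j]]
    # R is upper triangular, so R beta = Q^T y is solved by back substitution.
    qty = [dot(q_j, y) for q_j in q]
    beta = [0.0] * p
    for i in range(p - 1, -1, -1):
        s = sum(r[i][k] * beta[k] for k in range(i + 1, p))
        beta[i] = (qty[i] - s) / r[i][i]
    return beta


# Rows are [1, x1, x2]; y is generated exactly from y = 1 + 2*x1 - 3*x2.
X = [[1, 0, 1], [1, 1, 0], [1, 2, 1], [1, 3, 2], [1, 4, 0]]
y = [-2, 3, 2, 1, 9]
print([round(b, 6) for b in qr_least_squares(X, y)])  # [1.0, 2.0, -3.0]
```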

Linear Regression 2: Closed Form, Gradient Descent, Multivariate

One learner taking machine learning courses online learned about gradient descent for calculating the optimal values of the parameters in the hypothesis $h(x)$. For linear regression, gradient descent minimizes the same squared-error cost as the closed-form solution, so when $X$ has full column rank and the learning rate is small enough, it converges to the same coefficients; it simply reaches them iteratively instead of solving $X^\top X \beta = X^\top y$ directly.
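
A minimal batch gradient-descent sketch on the squared-error cost (scaled by $1/n$) shows it converging to the closed-form coefficients. The learning rate and iteration count are illustrative values chosen for this small example, not recommendations, and the function name is hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def gradient_descent(X: Matrix, y: Vector, learning_rate: float = 0.05,
                     n_iter: int = 5000) -> Vector:
    """Minimize the mean squared error (1/n) * ||X beta - y||^2 by batch gradient descent."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_iter):
        # Residuals X beta - y for the current coefficients.
        residuals = [sum(x_ij * b_j for x_ij, b_j in zip(row, beta)) - y_i
                     for row, y_i in zip(X, y)]
        # Gradient of the mean squared error: (2/n) * X^T (X beta - y).
        grad = [2.0 / n * sum(row[j] * res for row, res in zip(X, residuals))
                for j in range(p)]
        beta = [b_j - learning_rate * g_j for b_j, g_j in zip(beta, grad)]
    return beta


# Rows are [1, x]; y lies exactly on y = 1 + 2x.
X = [[1, 0], [1, 1], [1, 2], [1, 3]]
y = [1, 3, 5, 7]
print([round(b, 6) for b in gradient_descent(X, y)])  # [1.0, 2.0]
```

The step size matters: on this cost, gradient descent converges only if the learning rate is below $2/L$, where $L$ is the largest eigenvalue of the Hessian $(2/n) X^\top X$. The closed form has no such tuning parameter, but it requires solving a $p \times p$ linear system.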
