Read a Delta Table into a DataFrame in PySpark

Databricks uses Delta Lake for all tables by default, and so does Azure Databricks. This tutorial introduces common Delta Lake operations on Databricks, starting with loading a Delta table into a PySpark DataFrame. There are two ways to do it: read the table from its location on a file system, or, if the Delta Lake table is already stored in the catalog (aka the metastore), read it by name. Either way, the result is an ordinary Spark DataFrame. To try both, create a DataFrame with some range of numbers and save it as a Delta table first.

In the yesteryears of data management, data warehouses reigned supreme. On Databricks, Delta Live Tables in Python determines whether to update a dataset as a materialized view or a streaming table based on the defining query. You can also stream from a Delta table directly. In Scala, that takes `import io.delta.implicits._` followed by `spark.readStream.format("delta").table("events")`; PySpark provides the same reader without an import. Creating a Delta table in the first place needs only a little PySpark code, for example in a Synapse notebook, usually beginning with `from pyspark.sql.types import *` to bring the schema types into scope.

This tutorial provides code snippets that show how to read from and write to Delta tables. If you work with the pandas API on Spark (`pyspark.pandas`), the equivalent functions are:

- `read_delta`: read a Delta Lake table on some file system and return a DataFrame. If the Delta Lake table is already stored in the catalog (aka the metastore), use `read_table` instead.
- `DataFrame.to_delta`: write the DataFrame out as a Delta Lake table.
- `DataFrame.to_table`: write the DataFrame into a Spark table. `DataFrame.spark.to_table()` is an alias of `DataFrame.to_table()`.

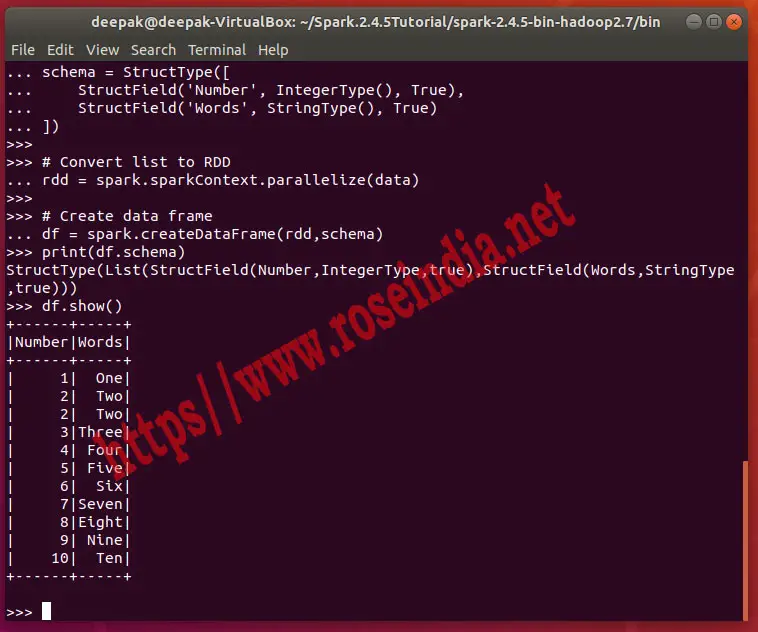

With PySpark read list into Data Frame

You can easily load tables to DataFrames by name as well. In the pandas API on Spark, `read_table` reads a Spark table and returns a DataFrame; it is the reading counterpart of `DataFrame.to_table`.

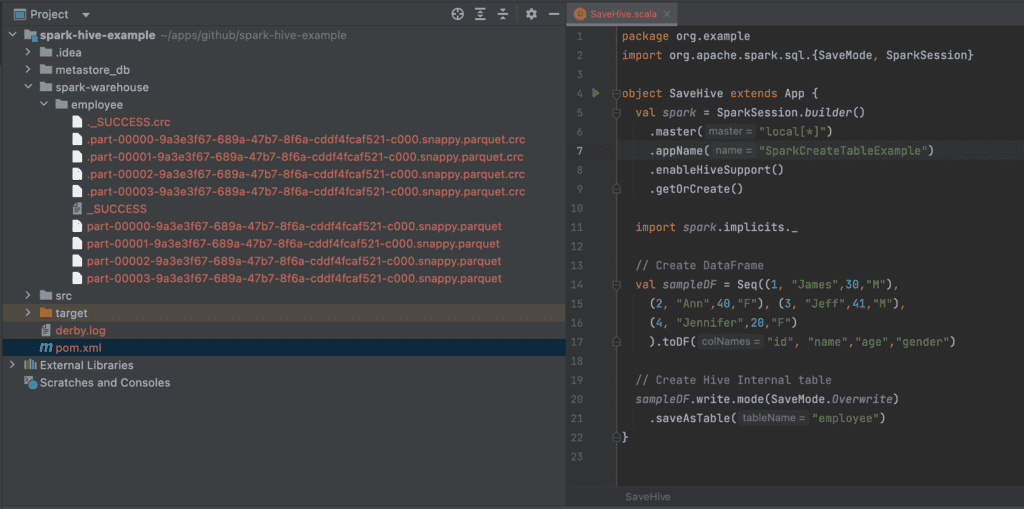

Spark SQL Read Hive Table Spark By {Examples}

In the standard PySpark API, you read a table into a DataFrame with `spark.read.table("name")`, or its shorthand `spark.table("name")`, whenever the Delta Lake table is already stored in the catalog. The resulting DataFrame carries the table's schema, which you can inspect with `df.schema` or `df.printSchema()`.

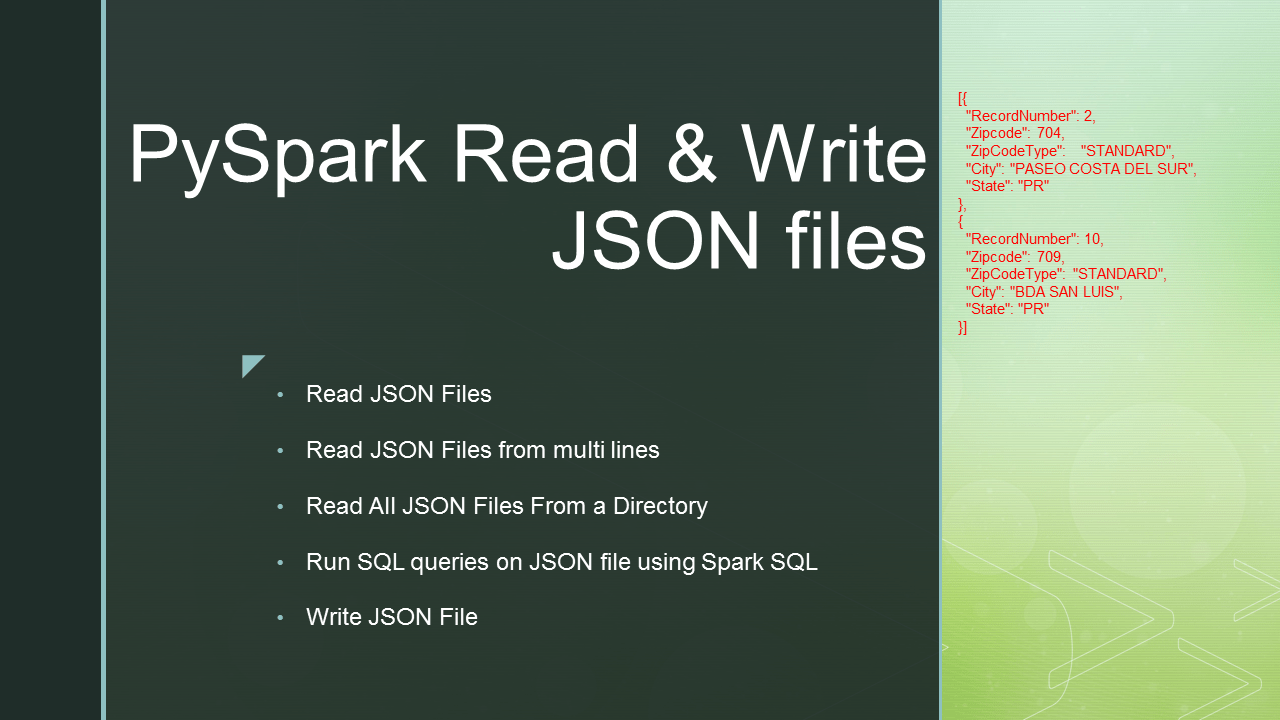

PySpark Read JSON file into DataFrame Blockchain & Web development

You can also create a Delta Lake table with the PySpark API. Besides writing a DataFrame in the `delta` format, the `delta-spark` package provides a `DeltaTable` builder that declares the table and its columns before any data is written.

June 05, 2023.

The pandas-on-Spark readers and writers take an `index_col` parameter (str or list of str, optional). It names the column or columns that hold the DataFrame's index in the Spark table. Without it, the index is not written, and on reading a default index is attached.

This guide helps you quickly explore the main features of Delta Lake. The sections that follow summarize the ways to get a Delta table into a DataFrame: by path, by name from the catalog, and as a stream, along with what changes on Databricks, where Delta is the default format.

Read a Delta Lake Table on Some File System and Return a DataFrame

When a Delta table is identified by its location, pass that location to `spark.read.format("delta").load(path)`, or to `pyspark.pandas.read_delta` in the pandas API on Spark. The path is the table's root directory, the one that holds the `_delta_log` transaction log, not an individual Parquet file inside it.

If the Delta Lake Table Is Already Stored in the Catalog (aka the Metastore)

A table that is registered in the catalog (aka the metastore) can be read by name, with no path: `spark.read.table("events")` in PySpark, or `pyspark.pandas.read_table("events")` in the pandas API on Spark. Pass `index_col` to the latter to restore an index column.

Read a Delta Table as a Stream

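In Scala, a streaming read starts with `import io.delta.implicits._` and `spark.readStream.format("delta").table("events")`. In PySpark, `spark.readStream.format("delta")` followed by `.table(name)` for a catalog table, or `.load(path)` for a path, returns a streaming DataFrame that picks up new commits to the table as they arrive. Important: if the schema for a Delta table changes after a streaming read begins against the table, the query fails. For most schema changes, restarting the stream resolves the mismatch.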

Databricks Uses Delta Lake for All Tables by Default

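Unless you specify otherwise, all tables on Databricks are Delta tables, so `spark.read.table` returns Delta-backed DataFrames without any extra options, and `CREATE TABLE` statements do not need a `USING DELTA` clause. Open-source Spark does not default to Delta: there you enable the Delta session extension and name the format explicitly.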