Read Parquet File in PySpark

Parquet is a columnar format that is supported by many other data processing systems. Spark SQL provides support for both reading and writing Parquet files. In PySpark, reading goes through the `parquet()` method of the `DataFrameReader` class (reached as `spark.read`), which loads Parquet data into a DataFrame. Its signature is `parquet(*paths, **options)`: it accepts one or more paths plus optional reader options and returns a `DataFrame`.

Python How To Load A Parquet File Into A Hive Table Using Spark Riset

I wrote the following codes. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Spark sql provides support for both reading and. Web pyspark read parquet file into dataframe. Web i use the following two ways to read the parquet file:

How To Read A Parquet File Using Pyspark Vrogue

I wrote the following codes. Web pyspark read parquet file into dataframe. Optionalprimitivetype) → dataframe [source] ¶. Parquet is a columnar format that is supported by many other data processing systems. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \.

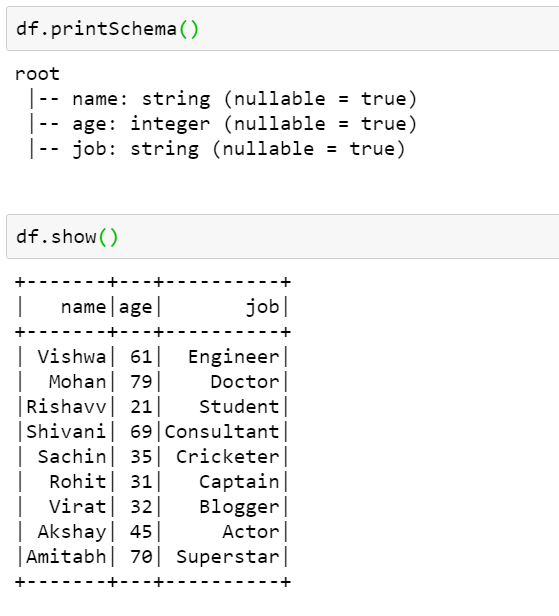

How to read a Parquet file using PySpark

From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Parquet is a columnar format that is supported by many other data processing systems. Web pyspark read parquet file into dataframe. Spark sql provides support for both reading and. I wrote the following codes.

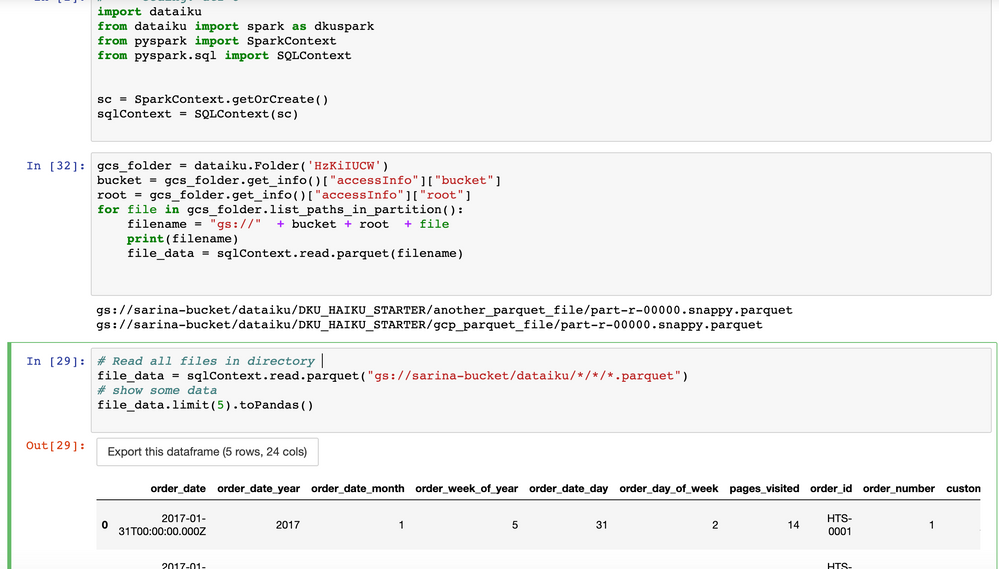

Solved How to read parquet file from GCS using pyspark? Dataiku

From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Web i use the following two ways to read the parquet file: Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Parquet is a columnar format that is supported by many other data processing systems. Optionalprimitivetype) → dataframe [source] ¶.

PySpark Tutorial 9 PySpark Read Parquet File PySpark with Python

Spark sql provides support for both reading and. Parquet is a columnar format that is supported by many other data processing systems. Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Optionalprimitivetype) → dataframe [source] ¶.

pd.read_parquet Read Parquet Files in Pandas • datagy

Optionalprimitivetype) → dataframe [source] ¶. Web pyspark read parquet file into dataframe. Spark sql provides support for both reading and. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Parquet is a columnar format that is supported by many other data processing systems.

Read Parquet File In Pyspark Dataframe news room

Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Parquet is a columnar format that is supported by many other data processing systems. I wrote the following codes. From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \.

How to resolve Parquet File issue

Web i use the following two ways to read the parquet file: Optionalprimitivetype) → dataframe [source] ¶. From pyspark.sql import sqlcontext sqlcontext = sqlcontext(sc). Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Web i want to read a parquet file with pyspark.

How To Read Various File Formats In Pyspark Json Parquet Orc Avro Www

Web i want to read a parquet file with pyspark. I wrote the following codes. Parquet is a columnar format that is supported by many other data processing systems. Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe. Optionalprimitivetype) → dataframe [source] ¶.

PySpark Read and Write Parquet File Spark by {Examples}

From pyspark.sql import sparksession spark = sparksession.builder \.master('local') \. Optionalprimitivetype) → dataframe [source] ¶. Spark sql provides support for both reading and. I wrote the following codes. Pyspark provides a parquet () method in dataframereader class to read the parquet file into dataframe.

PySpark Read Parquet File Into DataFrame

Once a `SparkSession` exists, `spark.read` returns a `DataFrameReader`, and its `parquet()` method loads the file into a DataFrame. Because Parquet stores the schema alongside the data, column names and types come from the file itself, so no schema has to be declared up front. The path may name a single file or a directory of part files, and several paths can be passed in one call.

Two Ways To Read The Parquet File

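The first way uses the `SparkSession` entry point: `from pyspark.sql import SparkSession`, then a builder chain that starts with `SparkSession.builder.master('local')` and ends with `.getOrCreate()`, and finally `spark.read.parquet(path)`. The second uses the older `SQLContext`: `from pyspark.sql import SQLContext`, then `sqlContext = SQLContext(sc)` for an existing `SparkContext` named `sc`, and `sqlContext.read.parquet(path)`. Both return a DataFrame, but `SQLContext` has been deprecated since Spark 3.0 in favor of `SparkSession`, so new code should prefer the first way.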